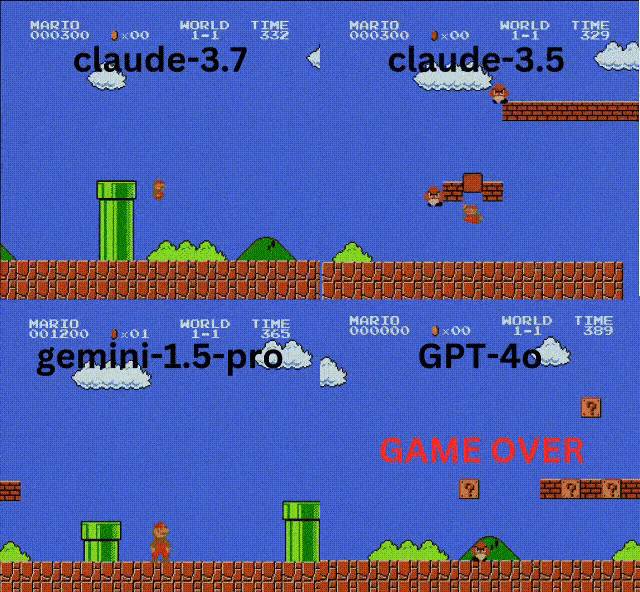

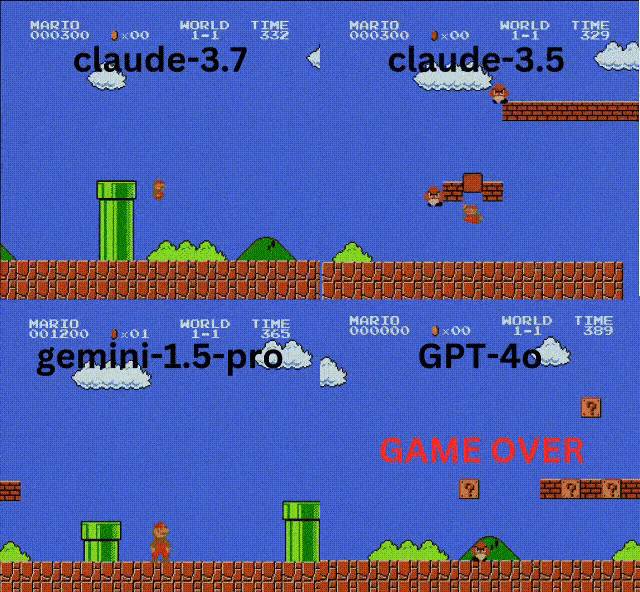

Benchmarking AI with Super Mario?

Highlights | Journal 36 | Ömer Yakabagi | March 4

Think Pokémon was a tough benchmark for AI?

Researchers at Hao AI Lab threw top AI models into the Mushroom Kingdom to see who could handle the pressure. Claude 3.7 crushed it, followed by Claude 3.5.

Meanwhile, Google’s Gemini 1.5 Pro & OpenAI’s GPT-4o? Struggling like noobs on World 1-1.

The catch? This wasn’t your grandma’s 1985 Mario.

The game ran in an emulator hooked up to GamingAgent, a framework that let each AI control Mario by spitting out Python commands. The models got simple instructions like “jump to dodge” plus real-time screenshots, but no cheat codes.
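For the curious, here's a toy sketch of that observe-then-act loop: the model sees a frame, replies with a Python command, and the harness runs it. Everything in it is hypothetical. `Observation`, `toy_model`, `run_loop`, and the `press()` command strings are invented for illustration and are not GamingAgent's actual API, and a real model would read a screenshot rather than two tidy true/false flags.

```python
from dataclasses import dataclass


@dataclass
class Observation:
    """Hypothetical, simplified stand-in for one screenshot."""
    enemy_ahead: bool
    gap_ahead: bool


def toy_model(obs: Observation) -> str:
    """Hypothetical stand-in for the AI model.

    Follows a 'jump to dodge' style instruction and answers with a Python
    command string. press() is an invented controller call, not GamingAgent's API.
    """
    if obs.enemy_ahead or obs.gap_ahead:
        return "press('right'); press('A')"  # keep moving and jump
    return "press('right')"  # nothing in the way: keep walking


def run_loop(observations: list[Observation]) -> list[str]:
    """Observe -> decide -> act: one command per incoming frame."""
    issued = []
    for obs in observations:
        command = toy_model(obs)
        # A real harness would execute `command` against the emulator here.
        issued.append(command)
    return issued


if __name__ == "__main__":
    frames = [
        Observation(enemy_ahead=False, gap_ahead=False),
        Observation(enemy_ahead=True, gap_ahead=False),
        Observation(enemy_ahead=False, gap_ahead=True),
    ]
    for cmd in run_loop(frames):
        print(cmd)
```

The real models obviously don't follow a two-line rule, which is exactly why some of them fell into pits.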

Here’s where it gets wild: AIs built for deep reasoning played worse than their more instinctive counterparts.

Why? Overthinking kills. In a game where milliseconds separate a perfect jump from a pitfall, slow decision-making is a death sentence.
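To put “milliseconds” in perspective, here's a back-of-the-envelope sketch. It assumes the emulator runs at about 60 frames per second (roughly the original NES rate) and keeps running while the model thinks. The 250 ms and 2-second decision times are made-up examples, not measurements from the Hao AI Lab test.

```python
NES_FPS = 60  # assumption: roughly the NTSC NES frame rate


def frames_elapsed(decision_ms: float, fps: float = NES_FPS) -> int:
    """Whole frames that go by while a model is still deciding,
    assuming the game does not pause during that time."""
    return int(decision_ms * fps // 1000)


if __name__ == "__main__":
    # Hypothetical decision times, for illustration only.
    for label, ms in [("quick 'instinctive' reply", 250), ("slow 'reasoning' reply", 2000)]:
        print(f"{label}: {frames_elapsed(ms)} frames go by")
```

Under these assumptions, a two-second think means Mario keeps running for about 120 frames before the jump command even arrives.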

Gaming has been an AI testing ground for years, but some, like OpenAI co-founder Andrej Karpathy, question whether it proves anything. Real life isn’t an infinite-loop emulator.

So, watching AI struggle through Mario levels? That’s entertainment.

Why Games Might Not Be the Best AI Benchmark

AI crushing games like Dota 2 & StarCraft II looks impressive, but does it mean anything?

OpenAI, once deep in gaming AI, is stepping away, and for good reason—beating games doesn’t equal intelligence.

The problem?

Games offer infinite training data, fixed rules, and clear rewards, which is nothing like the messy, unpredictable real world. AI that dominates Dota 2 or Go can’t adapt to new challenges or think beyond its coded objectives. Even OpenAI Five, which mastered Dota 2, could play only 16 characters, and players quickly found ways to exploit its weaknesses.

Experts argue AI should be tested in more dynamic environments—like language, collaboration, and real-world problem-solving. Sure, gaming AI is cool, but winning at chess doesn’t mean you’re a genius; it just means you’re good at chess.

Source: TechCrunch & VentureBeat